DUBAI: Social media giants Twitter and Facebook have responded to criticism in the wake of Friday’s mass shootings at mosques in New Zealand after the deadly terrorist attacks were live-streamed on the platforms that collectively have billions of worldwide users.

On Friday, 49 people were killed in shootings at two mosques in central Christchurch. One of the perpetrators filmed himself firing at worshippers and live-streamed the attack in a 17-minute video on Facebook, in addition to posting a lengthy manifesto on a Twitter account detailing racial motivations for the attack.

Social media platforms scrambled to remove video of the shootings from Facebook, Twitter and Instagram in the wake of the attack, described as “an extraordinary and unprecedented act of violence” by the country’s Prime Minister Jacinda Ardern.

A spokesperson for Twitter told Arab News that it had suspended the account in question and was “proactively working to remove the video content from the service.” Both, it said, are in violation of strict Twitter policies.

"We are deeply saddened to hear of the shootings in Christchurch,” the spokesperson said. "Twitter has rigorous processes and a dedicated team in place for managing emergency situations such as this. We will also cooperate with law enforcement to facilitate their investigations as required.”

Facebook said in a statement that it had removed the footage and was also pulling down posts expressing "praise or support" for the shootings. It added that it alerts authorities to violence or threats of violence as soon as it becomes aware of them through reports or Facebook tools. The gunman who opened fire inside one of the New Zealand mosques appeared to live-stream his attack on Facebook in a video that looked to have been recorded on a helmet camera.

"New Zealand Police alerted us to a video on Facebook shortly after the live stream commenced and we removed both the shooter's Facebook account and the video," said Mia Garlick, a Facebook representative in New Zealand. "We're also removing any praise or support for the crime and the shooter or shooters as soon as we're aware. We will continue working directly with New Zealand Police as their response and investigation continues. Our hearts go out to the victims, their families and the community affected by this horrendous act."

In a tweet sent from its official account, YouTube also committed to removing all footage. "Our hearts are broken over today's terrible tragedy in New Zealand," read the statement. "Please know we are working vigilantly to remove any violent footage."

Following the attack, New Zealand police have also warned against sharing online footage relating to the deadly shooting, saying in a Twitter post: "Police are aware there is extremely distressing footage relating to the incident in Christchurch circulating online.

"We would strongly urge that the link not be shared. We are working to have any footage removed.”

Despite the response, the video remains in circulation online, and experts say this is a chilling example of how social media sites are increasingly becoming a platform for terrorists to spread their hate-fueled ideology.

Following the shootings, Mosharraf Zaidi, an ex-government adviser, columnist and seasoned policy analyst who works for the policy think tank Tabadlab, tweeted: “Unbelievable that both @facebook and @twitter have failed to remove (the) video of the terrorist attack in #Christchurch. Every single view of those videos is a potential contribution to future acts of violence. These platforms have a responsibility they are failing to live up to.”

While the Facebook account that posted the video was no longer available shortly after the shooting and the Twitter account of the same name was quickly suspended, Zaidi, speaking to Arab News, said social media giants need to do more to stop their sites being platforms for terrorists.

"The quality of content filtering and management is a tricky and delicate issue. Governments routinely demand posts be taken down, which these platforms comply with. But often, when they comply, rights activists bemoan the negation of people’s freedoms.

"One of the most complex global governance challenges confronting the international community is the norms of how social media is to be regulated – with the added complexity that the objects of such norms are no longer sovereign states, but private businesses with platforms larger than most countries by population.

"I think these platforms need to spend much more of their R&D (research and development) on harm prevention and protecting their product, which is my time and your time on their platform.”

The terrorist attack, which Prime Minister Ardern said led to “one of New Zealand’s darkest days,” is the worst mass shooting in the country’s history and led to the arrest of four suspects – three men and a woman. One person was later released. Another, a man in his late 20s, has since been charged with murder. Australian Prime Minister Scott Morrison said one of the suspects in the “right-wing extremist attack” was an Australian-born citizen.

The director of the national Islamophobia monitoring service, Iman Atta of Tell MAMA (Measuring Anti-Muslim Attacks), condemned the attack, saying: "We are appalled to hear about the mass casualties in New Zealand. The killer appears to have put out a 'manifesto' based on white supremacist rhetoric which includes references to anti-Islamic comments. He mentions 'mass immigration' and 'an assault on our civilization' and makes repeated references to his 'white identity.’

"The killer also seems to have filmed the murders, adding a further cold ruthlessness to his actions. We have said time and time again that far-right extremism is a growing problem and we have been citing this for over six years now. That rhetoric is wrapped within anti-migrant and anti-Muslim sentiment.

"Anti-Muslim hatred is fast becoming a global issue and a binding factor for extremist far-right groups and individuals. It is a threat that needs to be taken seriously.”

Zahed Amanullah, a resident senior fellow at the Institute for Strategic Dialogue, said some people who see such videos “may be inspired” to commit a similar act of terrorism.

Facebook Live, which the shooter appeared to use, is an “extremely difficult hole to plug,” said Amanullah. The problem with such content appearing on social media, he pointed out, is that it feeds the curiosity of online viewers. “People are curious and want to look at forbidden fruit, no matter the content,” said Amanullah. “Even people who are horrified are curious.”

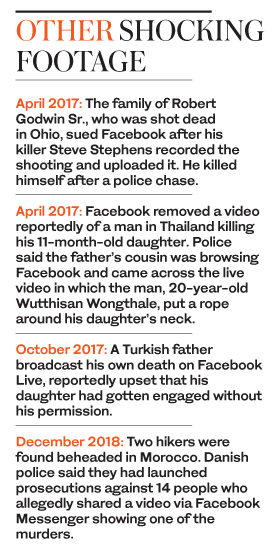

Such terrorist acts using social media platforms have already been seen in recent years, said Amanullah.

“Look at ISIS. Groups such as these have live-streamed first-person perspectives on terrorism; such extremists are producing this type of mentality online.”

Often, social media platforms struggle to contain the content online, particularly in real time. “We work very closely with companies such as Twitter and Facebook on these issues, and we have worked with them on identifying extremist content. I think they are taking it seriously and are reacting as quick as they can. In this instance, the video was removed in minutes. The challenge here of intercepting something being live-streamed is extremely difficult, whether it is a terrorist attack, or other incidents we have seen such as suicides.”

While people “want the bad guys and extremists offline,” Amanullah said, at present, the only way to completely prevent history repeating itself is to step up surveillance.

“This is a product of a social media age where it is so easy to broadcast what you are doing – and we might have to accept this will happen again.”