DUBAI: Earlier this year, former Facebook employee Frances Haugen leaked internal company documents and accused the social media giant of prioritizing profit over safety by failing to adequately tackle the spread of misinformation and harmful content.

The whistleblower also said that 87 percent of Facebook’s spending on combating misinformation is dedicated to English speakers, although only 9 percent of its users are English speakers.

Now, Facebook’s parent company Meta has developed a new artificial intelligence system called “few-shot learner (FSL)” that it claims is faster and more efficient, and that works in more than 100 languages, including Arabic.

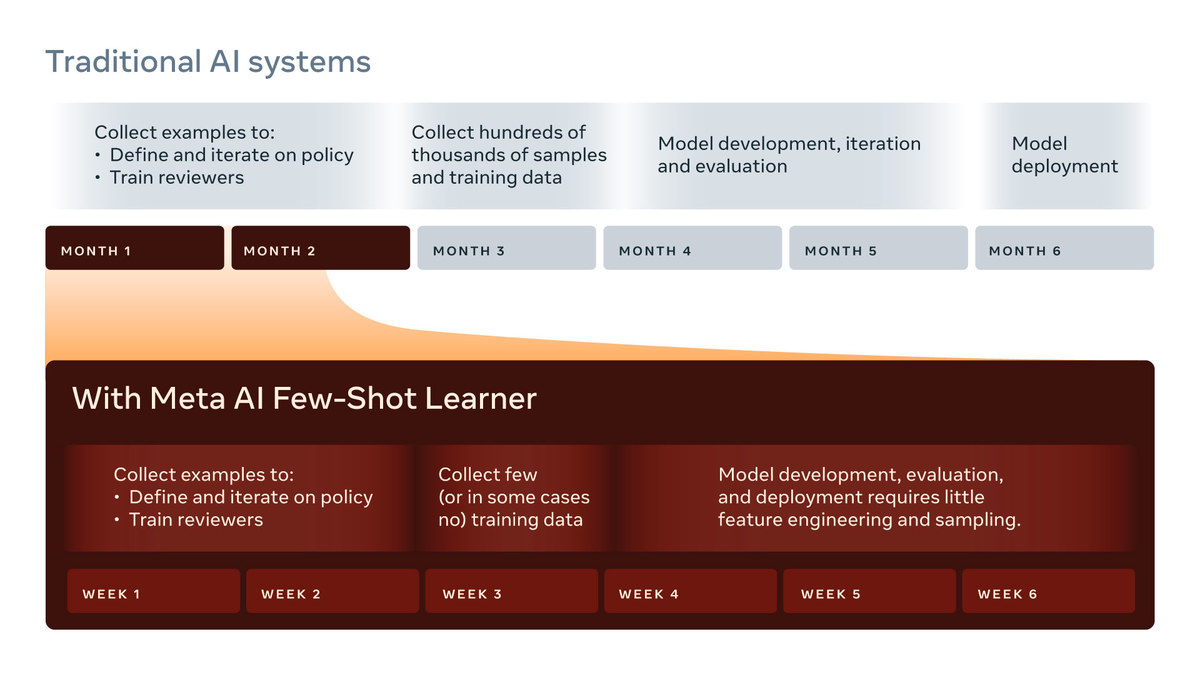

“AI needs to learn what to look for and it typically takes several months to collect and label thousands, if not millions, of examples necessary to train each individual AI system to spot a new type of content,” the company said in a blog post.

The new FSL system can adapt to new types of harmful content within weeks, instead of months, by learning from different kinds of data, such as images and text. It does this through a method called “few-shot learning,” in which the program starts with a broad understanding of many topics and then needs only a small number of labeled examples to learn a new task.
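To make the idea concrete, here is a minimal sketch of few-shot classification in Python. It is an illustration only and not Meta’s system: the `embed` function is a hypothetical stand-in (simple word counts) for the broad pretrained understanding the article describes, and the four labeled posts and the label names are invented for the example. Each new post is assigned the label whose handful of examples it most resembles.

```python
import math
from collections import Counter


def embed(text):
    """Hypothetical stand-in for a pretrained encoder: bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_prototypes(support):
    """Combine the few labeled examples for each label into one prototype."""
    prototypes = {}
    for text, label in support:
        prototypes.setdefault(label, Counter()).update(embed(text))
    return prototypes


def classify(text, prototypes):
    """Return the label whose prototype is most similar to the text."""
    vec = embed(text)
    return max(prototypes, key=lambda label: cosine(vec, prototypes[label]))


# Invented examples: only two labeled posts per category.
SUPPORT = [
    ("vaccines contain tracking microchips", "violating"),
    ("drinking bleach cures the virus", "violating"),
    ("the clinic opens at nine tomorrow", "benign"),
    ("book your vaccine appointment online", "benign"),
]
PROTOTYPES = build_prototypes(SUPPORT)

print(classify("bleach cures covid", PROTOTYPES))
print(classify("the clinic opens tomorrow", PROTOTYPES))
```

A production system would replace the word counts with a large pretrained model, which is what lets a few examples go a long way.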

“By leveraging few-shot mode early in the process, we can find data (samples) to bootstrap the model more efficiently, allowing us to thus label more efficiently. These samples can then get fed back into FSL, and as FSL sees more such samples, it gets better and better,” a Meta spokesperson told Arab News.
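The feedback loop the spokesperson describes can be sketched roughly as follows. This is a toy illustration under stated assumptions, not Meta’s pipeline: `score` is a hypothetical word-overlap scorer standing in for the model, `review` stands in for human labelers, and the posts are invented. In each round, the highest-scoring posts are sent for review and the confirmed ones are fed back in as new samples.

```python
def score(post, positives):
    """Fraction of the post's words already seen in known positive samples."""
    words = set(post.lower().split())
    known = {w for p in positives for w in p.lower().split()}
    return len(words & known) / len(words) if words else 0.0


def bootstrap(seed, pool, review, rounds=2, batch=2):
    """Repeatedly surface likely samples, have them labeled, and feed them back."""
    positives, remaining = list(seed), list(pool)
    for _ in range(rounds):
        # Rank unlabeled posts by similarity to what is known so far.
        remaining.sort(key=lambda p: score(p, positives), reverse=True)
        candidates, remaining = remaining[:batch], remaining[batch:]
        # Keep only the candidates the (hypothetical) reviewer confirms.
        positives += [p for p in candidates if review(p)]
    return positives


# Invented data: one seed sample and a small unlabeled pool.
SEED = ["miracle cure kills the virus"]
POOL = [
    "this miracle cure works",
    "secret cure doctors hide",
    "secret remedy they hide",
    "lunch was great today",
]


def review(post):
    """Hypothetical stand-in for a human labeler."""
    return "cure" in post or "remedy" in post


FOUND = bootstrap(SEED, POOL, review)
print(FOUND)
```

In this toy run, “secret remedy they hide” shares no words with the seed sample and scores zero at first. It is surfaced only after the first round adds confirmed samples, which mirrors the claim that the system improves as it sees more of them.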

So far, the new model has been tested on only a few problems. Although it is trained on all the integrity-violating policies that are part of Meta’s community standards, it has been deployed only on select problems, such as misleading information about COVID-19 and hostile speech related to bullying, harassment, violence and incitement, the spokesperson added.

According to initial tests, the company said, the new AI system was able to correctly detect posts that traditional systems may miss and helped reduce the prevalence of harmful content. Meta is currently working on additional tests to improve the system.

“We have seen that in combination with existing classifiers along with efforts to reduce harmful content, ongoing improvements in our technology and changes we made to reduce problematic content in News Feed, FSL has helped reduce the prevalence of other harmful content like hate speech,” said the spokesperson.

The FSL model shows just how reliant Facebook, now Meta, is on AI. In its latest earnings call, the company said that it expected capital expenditures of $29 billion to $34 billion next year compared with $19 billion this year.

David Wehner, chief financial officer of Meta, said that a large factor driving this increase is “an investment in our AI and machine learning capabilities, which we expect to benefit our efforts in ranking and recommendations for experiences across our products, including in feed and video, as well as improving ads performance and relevance.”

Although FSL is in its early stages, “these early production results are an important milestone that signals a shift toward more intelligent, generalized AI systems that can quickly learn multiple tasks at once,” Meta said in the blog post, adding that the company’s long-term vision is to achieve “human-like learning flexibility and efficiency.”