WASHINGTON: Taylor Swift did not endorse Donald Trump. Nor did Lady Gaga or Morgan Freeman. And Bruce Springsteen was not photographed in a “Keep America Trumpless” shirt. Fake celebrity endorsements and snubs are roiling the US presidential race.

Dozens of bogus testimonies from American actors, singers and athletes about Republican nominee Trump and his Democratic rival Kamala Harris have proliferated on social media ahead of the November election, researchers say, many of them enabled by artificial intelligence image generators.

The fake endorsements and brushoffs, which come as platforms such as the Elon Musk-owned X knock down many of the guardrails against misinformation, have prompted concern over their potential to manipulate voters as the race to the White House heats up.

Last month, Trump shared doctored images purporting to show that Swift had thrown her support behind his campaign, apparently seeking to tap into the pop singer's mega star power to sway voters.

Republican presidential candidate Donald Trump posted on social media this AI-generated image claiming to show his Democratic rival Kamala Harris addressing a gathering of communists in Chicago. Trump accuses Harris of being a communist. (X: @realDonaldTrump)

The photos — including some that Hany Farid, a digital forensics expert at the University of California, Berkeley, said bore the hallmarks of AI-generated images — suggested the pop star and her fans, popularly known as Swifties, backed Trump’s campaign.

What made Trump’s mash-up on Truth Social “particularly devious” was its combination of real and fake imagery, Farid told AFP.

Last week, Swift endorsed Harris and her running mate Tim Walz, calling the current vice president a “steady-handed, gifted leader.”

The singer, who has hundreds of millions of followers on platforms including Instagram and TikTok, said those manipulated images of her motivated her to speak up as they “conjured up my fears around AI, and the dangers of spreading misinformation.”

Following her announcement, Trump fired off a missive on Truth Social saying: "I HATE TAYLOR SWIFT!"

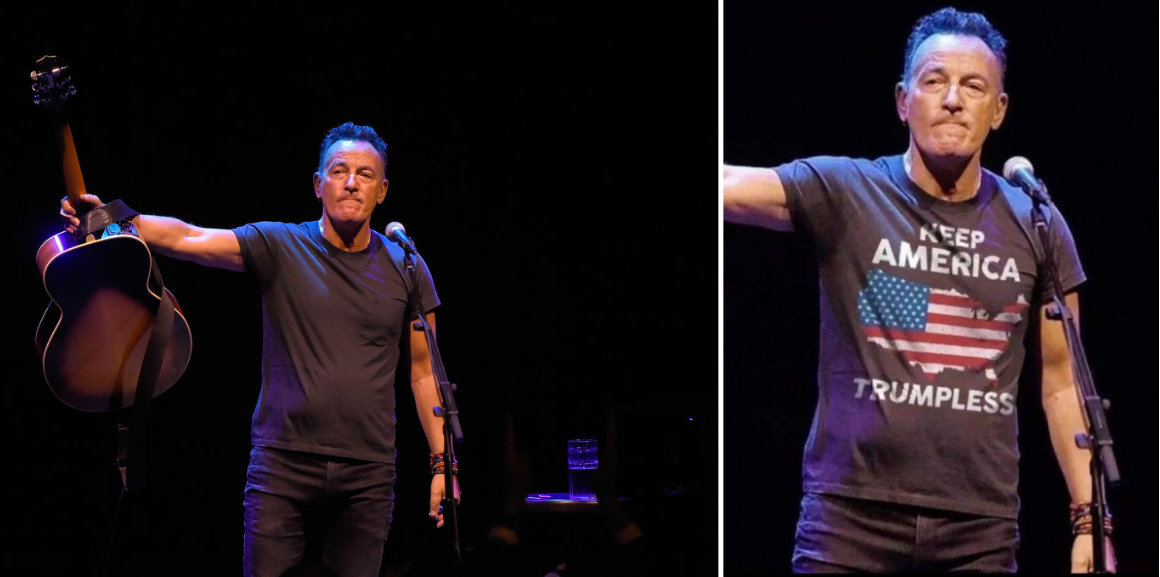

A combination image posted by Trump haters on social media shows a doctored picture of Bruce Springsteen campaigning against Donald Trump (right frame). The image was apparently a tampered version of a real picture shared on social media (left). (Social media photos)

A database from the News Literacy Project (NLP), a nonprofit that recently launched a misinformation dashboard to raise awareness about election falsehoods, has so far listed 70 social media posts peddling fake "VIP" endorsements and snubs.

“In these polarizing times, fake celebrity endorsements can grab voters’ attention, influence their outlooks, confirm personal biases, and sow confusion and chaos,” Peter Adams, senior vice president for research at NLP, told AFP.

NLP’s list, which appears to be growing by the day, includes viral posts that have garnered millions of views.

Among them are posts sharing a manipulated picture of Lady Gaga with a “Trump 2024” sign, implying that she endorsed the former president, AFP’s fact-checkers reported.

Other posts falsely asserted that the Oscar-winning Morgan Freeman, who has been critical of the Republican, said that a second Trump presidency would be “good for the country,” according to US fact-checkers.

Digitally altered photos of Springsteen wearing a “Keep America Trumpless” shirt and actor Ryan Reynolds sporting a “Kamala removes nasty orange stains” shirt also swirled on social media sites.

“The platforms have enabled it,” Adams said.

"As they pull back from moderation and hesitate to take down election-related misinformation, they have become a major avenue for trolls, opportunists and propagandists to reach a mass audience."

In particular, X has emerged as a hotbed of political disinformation after the platform scaled back content moderation policies and reinstated accounts of known purveyors of falsehoods, researchers say.

Musk, who has endorsed Trump and has over 198 million followers on X, has been repeatedly accused of spreading election falsehoods.

American officials responsible for overseeing elections have also urged Musk to fix X's AI chatbot, known as Grok, which lets users create images from text prompts, after it shared misinformation.


Lucas Hansen, co-founder of the nonprofit CivAI, demonstrated to AFP the ease with which Grok can generate a fake photo of Swift fans supporting Trump using a simple prompt: “Image of an outside rally of woman wearing ‘Swifties for Trump’ T-shirts.”

“If you want a relatively mundane situation where the people in the image are either famous or fictitious, Grok is definitely a big enabler” of visual disinformation, Hansen told AFP.

“I do expect it to be a large source of fake celebrity endorsement images,” he added.

As the technology develops, it's going to become "harder and harder to identify the fakes," said Jess Terry, an intelligence analyst at Blackbird.AI.

“There’s certainly the risk that older generations or other communities less familiar with developing AI-based technology might believe what they see,” Terry told AFP.